MQTT with Large Payloads and Limited Memory

Why MQTT isn't the optimal choice for large payloads, especially when your device has little memory.

Usually, the backend code I write serves a frontend application or a mobile app. Lately, though, I've been working on a project that consists of a backend server and a few tiny, power-limited embedded devices. The goal was to send images to these devices. Each transfer had to be as fast as possible, because the devices have power constraints and should keep the Wi-Fi module on as little as possible.

Going for MQTT ☁️

So the plan is to use MQTT instead of HTTP for the server-client interactions.

MQTT should be the better choice, right? After all, it's a lightweight protocol designed for IoT devices. Let's find out!

Each embedded device only sends and receives a few messages every 24 hours, something like 5 messages over about 30 seconds each day. On top of that comes the only file in this daily exchange: the image.

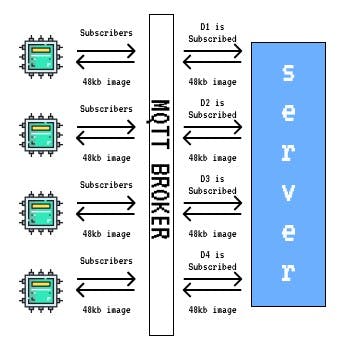

This diagram shows how things were supposed to work.

When these images were serialized for transmission, their size ballooned to approximately 96 KB.
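The post doesn't say which serialization was used. If it was Base64, a common way to carry binary data as text (this is an assumption), the growth is predictable: every 3 bytes become 4 ASCII characters, roughly 33% more. The 72 KB input below is purely illustrative, not a measured image size.

```python
import base64
import math


def base64_size(raw_size: int) -> int:
    """Length of the padded Base64 encoding of raw_size bytes."""
    return 4 * math.ceil(raw_size / 3)


raw = bytes(72 * 1024)             # hypothetical 72 KB image (all zero bytes)
encoded = base64.b64encode(raw)
print(len(raw), len(encoded), base64_size(len(raw)))  # 73728 98304 98304
```

Under that assumption, a roughly 72 KB binary image would come out at about 96 KB on the wire.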

What Went Wrong ⁉️

The embedded device has very limited memory, and so does its MQTT client's buffer.

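The MQTT client split big messages into small pieces to fit its buffer, and as a result the images received by the embedded device were either missing or corrupted, even though the client was configured to use QoS 2. QoS 2 guarantees that each message is delivered exactly once, at the cost of a four-packet handshake (PUBLISH, PUBREC, PUBREL, PUBCOMP) and more work on the broker. That guarantee covers delivery, though; it cannot help when the receiving client has no room to hold the message.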
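To see where the extra broker work comes from, here is a simplified model of the receiving side of a QoS 2 exchange, loosely following the MQTT 3.1.1 rules: the receiver remembers each packet identifier from PUBLISH until the matching PUBREL arrives, so a retransmitted PUBLISH is acknowledged again but not delivered twice. The class and method names are hypothetical, and networking, sessions and timeouts are left out; it is a sketch of the state-keeping, not a client implementation.

```python
from dataclasses import dataclass, field


@dataclass
class QoS2Receiver:
    """Simplified receiver side of MQTT's QoS 2 (exactly once) flow."""

    pending_ids: set[int] = field(default_factory=set)     # IDs awaiting PUBREL
    delivered: list[bytes] = field(default_factory=list)   # payloads handed to the app

    def on_publish(self, packet_id: int, payload: bytes) -> str:
        if packet_id not in self.pending_ids:
            # First time this ID is seen: deliver once and remember the ID.
            self.pending_ids.add(packet_id)
            self.delivered.append(payload)
        # New or duplicate, the sender always gets a PUBREC back.
        return "PUBREC"

    def on_pubrel(self, packet_id: int) -> str:
        # The sender got our PUBREC; forget the ID so it can be reused.
        self.pending_ids.discard(packet_id)
        return "PUBCOMP"


rx = QoS2Receiver()
print(rx.on_publish(1, b"chunk-0"))  # PUBREC
print(rx.on_publish(1, b"chunk-0"))  # PUBREC again (retransmission), nothing new delivered
print(rx.on_pubrel(1))               # PUBCOMP
print(rx.delivered)                  # [b'chunk-0']
```

A broker plays this role for every in-flight QoS 2 message it receives, which is the per-message state tracking mentioned above.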

The Solution

As a solution, I divided the image into small chunks myself, this time at the application layer, so the MQTT client is no longer responsible for chunking.

Each chunk carries an extra number that represents its position, because MQTT might not deliver the chunks in order; the embedded device uses these numbers to put the chunks back in order once it has received them.
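A minimal sketch of this application-level chunking, assuming a hypothetical 4-byte header (a 16-bit sequence number followed by a 16-bit total chunk count) and a hypothetical 1 KB chunk size. The post doesn't specify its exact format; only the idea of a per-chunk order number comes from it.

```python
import random
import struct
from typing import Optional

CHUNK_SIZE = 1024               # hypothetical; must fit the device's MQTT receive buffer
HEADER = struct.Struct(">HH")   # hypothetical header: sequence number, total chunks (big-endian uint16)


def split_into_chunks(data: bytes, chunk_size: int = CHUNK_SIZE) -> list[bytes]:
    """Split data into MQTT-sized messages, each prefixed with (seq, total)."""
    total = max(1, -(-len(data) // chunk_size))  # ceiling division; empty data still yields one chunk
    if total > 0xFFFF:
        raise ValueError("too many chunks for a 16-bit sequence number")
    return [
        HEADER.pack(seq, total) + data[seq * chunk_size:(seq + 1) * chunk_size]
        for seq in range(total)
    ]


class ChunkAssembler:
    """Collects chunks in any order and rebuilds the payload once every chunk has arrived."""

    def __init__(self) -> None:
        self.parts: dict[int, bytes] = {}

    def add(self, message: bytes) -> Optional[bytes]:
        seq, total = HEADER.unpack_from(message)
        self.parts[seq] = message[HEADER.size:]  # a duplicate chunk just overwrites itself
        if len(self.parts) == total:
            return b"".join(self.parts[i] for i in range(total))
        return None


image = bytes(range(256)) * 384    # 96 KB of sample data standing in for the image
chunks = split_into_chunks(image)
random.shuffle(chunks)              # simulate out-of-order arrival
assembler = ChunkAssembler()
for msg in chunks:
    result = assembler.add(msg)
print(result == image, len(chunks))  # True 96
```

This sketch handles one image at a time and keeps the parts in a dict for clarity. On a memory-starved device, writing each chunk straight to flash at offset `seq * CHUNK_SIZE` would keep only one chunk in RAM at a time.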

And yes, that did the trick. When the image is divided into chunks at the application layer, each chunk becomes a separate MQTT message, and tracking their delivery becomes the broker's job. Remember QoS 2? The broker keeps performing read/write operations to track the state of each chunk. When one big message is sent instead, the MQTT client has to handle splitting it up, and because there is not enough memory to keep up with that much data, you can run into data loss.

Yet Another Solution! - More Memory Required

What if more memory were available? Even then, QoS 2 increases bandwidth usage and overhead, since every message goes through the four-packet handshake, bringing the total close to what a single HTTP request consumes. With large files the overhead adds up, because each file is sent twice: once from the server to the broker and once from the broker to the device. That does not scale well as more embedded devices join the scene.
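A rough per-hop calculation makes the trade-off concrete. This sketch assumes MQTT 3.1.1 packet layouts, QoS 2 on the hop being measured, a hypothetical topic name and the hypothetical 1 KB chunk size; TCP/IP and TLS overhead are not counted.

```python
import math


def remaining_length_size(n: int) -> int:
    """Bytes MQTT uses to encode the variable-length 'Remaining Length' field (1 to 4)."""
    size = 1
    while n > 127:
        n //= 128
        size += 1
    return size


def qos2_cost(payload_size: int, chunk_size: int, topic: str) -> tuple[int, int, int]:
    """Return (messages, packets, protocol-overhead bytes) for ONE hop at QoS 2.

    Each message needs PUBLISH + PUBREC + PUBREL + PUBCOMP; in MQTT 3.1.1
    the last three are 4 bytes each (2-byte fixed header + 2-byte packet ID).
    """
    topic_len = len(topic.encode("utf-8"))
    messages = max(1, math.ceil(payload_size / chunk_size))
    overhead = 0
    for i in range(messages):
        body = min(chunk_size, payload_size - i * chunk_size)
        variable_header = 2 + topic_len + 2           # topic length prefix, topic, packet ID
        remaining = variable_header + body
        overhead += 1 + remaining_length_size(remaining) + variable_header  # PUBLISH headers
        overhead += 3 * 4                             # PUBREC + PUBREL + PUBCOMP
    return messages, 4 * messages, overhead


topic = "devices/42/image"  # hypothetical topic name
print(qos2_cost(96 * 1024, 1024, topic))       # (96, 384, 3360)
print(qos2_cost(96 * 1024, 96 * 1024, topic))  # (1, 4, 36)
```

In this model the extra bytes stay at a few kilobytes per hop, but the packet count (and with it the number of times the Wi-Fi radio has to send and wait for an acknowledgement) grows with every chunk, and both hops pay it.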

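Another option is to use MQTT only to tell the device the address of an HTTP endpoint, after which the client downloads the image from that endpoint with a regular HTTP request. The large payload never passes through the broker, and the only added overhead is establishing one new TCP connection for the download.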
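A minimal sketch of this hybrid approach, assuming a hypothetical JSON notification format with `url`, `size` and `sha256` fields. Python stands in for whatever HTTP client the device firmware actually uses; the function names are illustrative only.

```python
import hashlib
import json
import urllib.request


def build_notification(url: str, image: bytes) -> bytes:
    """Server side: a small MQTT payload telling the device where to download the image."""
    return json.dumps({
        "url": url,
        "size": len(image),
        "sha256": hashlib.sha256(image).hexdigest(),
    }).encode("utf-8")


def verify_download(notification: dict, body: bytes) -> bool:
    """Check the downloaded bytes against the size and hash announced over MQTT."""
    return (len(body) == notification["size"]
            and hashlib.sha256(body).hexdigest() == notification["sha256"])


def fetch_image(payload: bytes, timeout: float = 10.0) -> bytes:
    """Device side: parse the MQTT notification, then download the image over HTTP."""
    notification = json.loads(payload)
    with urllib.request.urlopen(notification["url"], timeout=timeout) as resp:
        body = resp.read()
    if not verify_download(notification, body):
        raise ValueError("downloaded image does not match the notification")
    return body


image = b"\x00" * 1024  # placeholder image bytes
msg = build_notification("http://example.com/images/42.bin", image)  # hypothetical URL
print(verify_download(json.loads(msg), image))  # True
```

The MQTT message stays at a couple of hundred bytes regardless of image size, and the size and hash let the device detect a truncated or corrupted download before using it.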

Conclusion

MQTT is designed for real-time messaging, and many brokers limit message size accordingly. AWS IoT Core, for example, caps MQTT message payloads at 128 KB.

If you are limited to MQTT, chunking large payloads at the application layer is the best way to go, although it still introduces extra overhead. A better approach is to combine MQTT with another protocol such as HTTP or FTP for the large files, or even to use only those protocols when there are no frequent small messages to exchange.